Thanksgiving proved unusual for Sam Altman, OpenAI's CEO. Typically, he heads to St. Louis to visit family, but this year the holiday fell amid a struggle for control of a company seen by some as holding humanity's destiny. Altman, fatigued, retreated to his Napa Valley ranch for a hike, then returned to San Francisco to meet with one of the board members who had fired and reinstated him within five tumultuous days. Stepping away from his computer, Altman cooked vegetarian pasta, played loud music, and shared wine with his fiancé, Oliver Mulherin. Reflecting on the upheaval, Altman remarked, "This was a 10-out-of-10 crazy thing to live through."

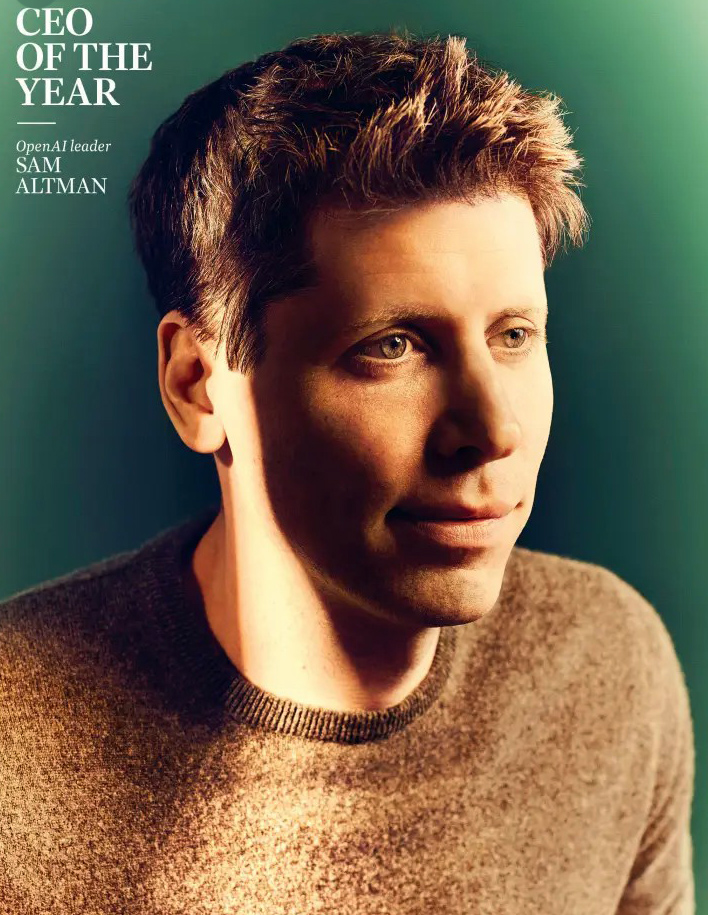

Exactly one year after the release of ChatGPT, Altman recalls the transformative impact of the chatbot and its successor, GPT-4, on both the company and the world. In 2023, many started taking AI seriously. OpenAI, initially a nonprofit dedicated to AI's benevolent development, skyrocketed to an $80 billion valuation. Altman, by then a prominent figure in the technological revolution, faced a sudden and unexpected challenge as the company neared implosion.

On November 17, OpenAI's nonprofit board dismissed Altman without clear explanation. The ensuing corporate drama rivaled scenes from "Succession." Employee revolts and investor discontent ensued, with wild speculations, including claims of espionage. A standoff between employees and the board unfolded, with a threat of mass resignations unless Altman was reinstated. Eventually, Altman regained his position, and the board underwent a significant overhaul.

The episode left lingering questions about OpenAI and its CEO. Interviews with over 20 individuals in Altman's circle revealed a complex portrait. While praised for his affability, brilliance, and vision, Altman faced criticism for perceived slipperiness and dishonesty. Board members cited his lack of transparency and behavior as reasons for his ousting.

OpenAI declined to comment on the events, citing an ongoing independent review. Altman, in his second stint as CEO, faces uncertainty about the company's future leadership. OpenAI, having established itself as a frontrunner, anticipates releasing more capable models. However, challenges loom as the tech industry experiences hype cycles and increased competition in AI research.

The standoff at OpenAI underscores the belief that a new world, driven by rapidly advancing AI, is imminent. Altman envisions achieving Artificial General Intelligence (AGI) in the next four or five years, heralding potential global economic transformation and unprecedented advancements in various fields. The stakes are high, reflecting the urgency and significance of overseeing a company at the forefront of revolutionary technology.

Nevertheless, this advancement comes with significant risks that deeply concern many observers. The rapid progress in AI capabilities over the past year raises alarms, particularly in addressing the "alignment problem" within the industry. This problem involves ensuring that Artificial General Intelligence (AGI) aligns with human values, a challenge yet to be resolved by computer scientists. Disagreements persist on who should determine these values. Altman and others have underscored the potential "existential" risks associated with advanced AI, comparable to pandemics and nuclear war. Against this backdrop, OpenAI's board concluded that its CEO, Altman, could not be trusted. Daniel Colson, executive director of the Artificial Intelligence Policy Institute, emphasizes that stakeholders are now playing for keeps due to the perceived urgency in redirecting the trajectory of AI development.

On a bright morning in early November, Altman appears apprehensive backstage at OpenAI's inaugural developer conference. Dressed in a gray sweater and vibrant Adidas Lego sneakers, he acknowledges his discomfort with public speaking. As a high school student, he spent Friday nights immersed in an original Bondi Blue iMac. Raised in a middle-class Jewish family in St. Louis, Altman, the eldest of four siblings, emerged as a self-assured, nerdy figure. His journey into entrepreneurship began when he dropped out of Stanford after two years to co-found Loopt, a location-based social network.

Loopt became one of the first companies to join Y Combinator, where Altman's strategic talent and persistence impressed co-founder Paul Graham. Altman's presidency at YC expanded its focus beyond software to include "hard tech." His strategic vision aimed to tackle challenges where technology might not yet be feasible but could unlock substantial value. Altman's engagement extended to hard-tech startups like Helion, a nuclear-fusion company, demonstrating his commitment with substantial personal investments.

Altman's ambitions extend to diverse fields, including investing $180 million in Retro Biosciences, a longevity startup, and contributing to Worldcoin, a biometric-identification system with a cryptocurrency component. Through OpenAI, he allocated $10 million to a universal basic income (UBI) study, addressing the economic implications expected from AI advancements. Altman's political interests are evident in his exploration of running for governor of California in 2017, driven by concerns about the direction of the country under Donald Trump. While he ultimately decided against a political career, Altman remains involved in political discussions, advocating for a land-value tax in recent meetings with world leaders.

Asked during a stroll through OpenAI's headquarters about the overarching vision guiding his various investments and interests, Altman answers succinctly: "Abundance. That’s it." His investments in fusion and superintelligence, he asserts, form the foundation for a more egalitarian and prosperous future. Altman believes that abundant intelligence and abundant energy would do more good for people than any other contribution he can conceive of.

Altman's serious contemplation of Artificial General Intelligence (AGI) dates back nearly a decade, to a period when such endeavors were considered "career suicide." He and Elon Musk shared concerns about the inevitability and potential dangers of smarter-than-human machines built for profit. They envisioned OpenAI as a nonprofit AI lab that would serve as an ethical counterweight to corporate-driven technology and ensure benefits for humanity at large.

In 2015, Altman initiated discussions with key figures like Ilya Sutskever, a renowned machine-learning researcher. Their collaboration led to the formation of OpenAI in December 2015, supported by $1 billion in donations from influential investors. Initially, Altman remained involved from a distance, with no designated CEO. OpenAI's early years, spent in a converted luggage factory in San Francisco, saw brilliant minds exploring ideas, albeit with a sense of uncertainty.

The year 2018 marked a pivotal moment with the introduction of OpenAI's charter, outlining values to guide AGI development. The document reflected a delicate balance between safety and speed, emphasizing the necessity of winning the race for positive societal integration. Sutskever's commitment to neural networks played a crucial role, with vast computing power and funding becoming essential. Facing financial challenges in 2019, Altman, then running YC, explored various funding options, ultimately deciding on a "capped profit" subsidiary with a nonprofit board to govern the organization.

In 2019, Altman transitioned to OpenAI's full-time CEO role, recognizing the lab's need for proper leadership. Despite the unconventional restructuring, Altman contends it was the "least bad idea," allowing cash infusion from investors while upholding a commitment to responsible AI development. The move successfully attracted $1 billion from Microsoft, later expanding to $13 billion. However, the restructuring and partnership with Microsoft altered OpenAI's dynamics, prompting concerns about a shift toward a more traditional tech company model. Some employees worried about the impact on the organization's original nonprofit ethos.

Microsoft's infusion of capital greatly enhanced OpenAI's capacity to scale its systems, while Google's "transformer" innovation significantly increased neural networks' efficiency in pattern recognition. The GPT series, starting with GPT-1 and culminating in GPT-3, showcased remarkable improvements with each iteration. Altman reminisces about a pivotal moment in 2019 when experiments into "scaling laws" revealed the profound possibilities of AGI. The smooth, exponential curves indicated that AGI might arrive sooner than anticipated, marking a transformative realization for the researchers at OpenAI.

This realization prompted a shift in OpenAI's technology release strategy. Having abandoned its initial principle of openness, the company adopted "iterative deployment," gradually sharing its tools with a wider audience. This approach allowed OpenAI to collect data on AI usage, enhance safety mechanisms, and acclimate the public to the technology's impact. Although the strategy succeeded on those terms, some viewed it as potentially fueling a dangerous AI arms race and compromising safety priorities. The decision led to the departure of seven dissenting staffers, who founded a rival lab called Anthropic.

In 2022, OpenAI completed work on GPT-4 and contemplated releasing it alongside a basic, user-friendly chat interface. Altman, considering the potential impact, proposed introducing the chatbot with GPT-3.5 first, followed by GPT-4 a few months later. The launch decision deviated from OpenAI's usual deliberative process, with Altman swiftly approving the plan. The resulting product, ChatGPT, unexpectedly attracted over 1 million users in five days and now boasts 100 million users.

OpenAI's success in 2023 was unprecedented, generating $100 million in monthly revenue and attracting billions in funding. The release of GPT-4 surpassed expectations, solidifying OpenAI's position as a frontrunner in the AI field. Altman's sudden global stardom was marked by engagements with lawmakers, a world tour, and numerous high-profile events. OpenAI's achievements spurred policy discussions on AI safety at international levels.

As Altman confidently addressed OpenAI's developer conference in November, the company seemed unstoppable. Plans for autonomous AI "agents" were announced, emphasizing a future where AI could act on behalf of users. Despite debates on the risks of AI, Altman expressed optimism, attributing increased awareness to OpenAI's deployment strategy. While discussions on AI's potential impact on civilization continue, Altman's current concern revolves around an urban coyote near his San Francisco home, illustrating the unexpected challenges that come with transformative success.

As Altman exuded confidence, disquiet brewed within his board of directors, which had dwindled from nine members to six. Comprising three OpenAI employees—Altman, Sutskever, and Brockman—and three independent directors, the panel faced internal conflicts on how to replace departing members. Over time, concerns about Altman's conduct surfaced among the independent directors and Sutskever, who noticed his tendency to manipulate situations to achieve desired outcomes and control information flow.

One noteworthy incident involved Altman's attempt to remove Helen Toner from the board after she published an academic paper critical of OpenAI's safety efforts. While this event didn't directly prompt Altman's firing, it exemplified the kind of conduct that undermined good governance and contributed to the board's loss of trust in him.

Altman's termination, announced abruptly on Nov. 17, cited his inconsistent communication with the board as hindering its responsibilities. Locked out of his computer, Altman immediately planned to start a new company. However, the board underestimated the internal backlash, with employees threatening to quit unless Altman was reinstated. Negotiations ensued for nearly 48 hours, culminating in the appointment of Emmett Shear as interim CEO and Altman's move to Microsoft to start a new advanced AI unit.

While the board secured some concessions, such as an independent investigation into Altman's conduct, Altman and Brockman did not regain their seats. Altman acknowledged the misunderstandings and welcomed the review of recent events. OpenAI's leadership expressed alignment and optimism for a second chance, but significant changes, including a board overhaul, are expected.

The episode underscored challenges in AI governance, prompting reflection on how decisions with far-reaching consequences are made. Altman, back in the CEO's chair, aims to stabilize the company, support research areas, and contribute to improved governance, acknowledging the need for scrutiny and public accountability. The ordeal has positioned Altman as a key figure in AI development, emphasizing the collective responsibility involved in shaping superintelligence.

As Altman projected confidence, a sense of unease pervaded his board of directors, which had dwindled from nine members to six. This left a panel comprising three OpenAI employees—Altman, Sutskever, and Brockman—and three independent directors: Adam D’Angelo, CEO of Quora; Tasha McCauley, a technology entrepreneur and Rand Corp. scientist; and Helen Toner, an AI policy expert at Georgetown University’s Center for Security and Emerging Technology.

Internal discussions revealed disagreements on how to replace the departing members, fueling concerns about Altman's behavior among the independent directors and Sutskever. They observed Altman's tendency to manipulate situations and control information flow, creating a fragmented picture that hindered the board's ability to oversee the company effectively.

An illustrative incident occurred in late October, when Altman sought to remove Helen Toner from the board, misrepresenting opinions to support his case. Although this event didn't directly lead to Altman's firing, it symbolized the conduct that undermined good governance. The board, fearing that Altman would rally support and challenge its decision, felt it had to act swiftly and terminated him.

On the evening of Thursday, Nov. 16, Sutskever asked Altman to talk the following day. On Friday, Nov. 17, on a Google Meet call that excluded Brockman, Sutskever informed Altman of his firing. The board's terse statement said that Altman had not been consistently candid, which hindered its ability to fulfill its responsibilities and eroded its confidence in his leadership.

Locked out of his computer, Altman immediately planned to start a new company, receiving overwhelming support from his network. However, the board underestimated the internal backlash, with employees threatening to quit unless Altman was reinstated. Negotiations ensued for nearly 48 hours, leading to the appointment of Emmett Shear as interim CEO, and Altman and Brockman joining Microsoft to initiate a new advanced AI unit.

The episode highlighted conflicts within the board, with legal constraints preventing the disclosure of specific details. The absence of examples for Altman's cited lack of candor fueled speculation that personal vendettas or incompetence had driven the decision. Altman's firing triggered a swift response from the company's staff, who threatened mass resignations.

Ultimately, the board, seeking to bring stability, appointed a new interim CEO and secured a few concessions, including an independent investigation into Altman's conduct. Altman returned, and the company's employees celebrated their reunion. The ordeal prompted a reevaluation of OpenAI's governance structure, recognizing the challenges in ensuring responsible AI development.

Altman, back in the CEO's chair, outlined priorities such as stabilizing the company, supporting research areas, and contributing to improved governance. However, the path forward remains vague, emphasizing the need for effective AI governance. Altman's role and decisions will face heightened scrutiny, highlighting the challenges posed by influential technologists making pivotal choices with broad societal implications.